This summer, I was fortunate to take part in the GitLab Google Summer of Code (GSoC) program and to work with the Security team. I had a blast, and I want to share my experience with you!

Special thanks to my mentors! Dennis was my direct mentor and supported me every day throughout the project. Ethan, who manages the Security Research team, kept track of our progress and gave me a great deal of technical and non-technical help. I am grateful for their kindness and for the guidance that helped me succeed with the project.

I also want to thank all my colleagues on the Security team. Their professionalism and friendliness show the charm of GitLab's culture.

Package Hunter is an open source tool from GitLab for detecting malicious code in dependencies. It saves developers valuable time when checking the reliability of third-party dependencies and helps them maintain application security.

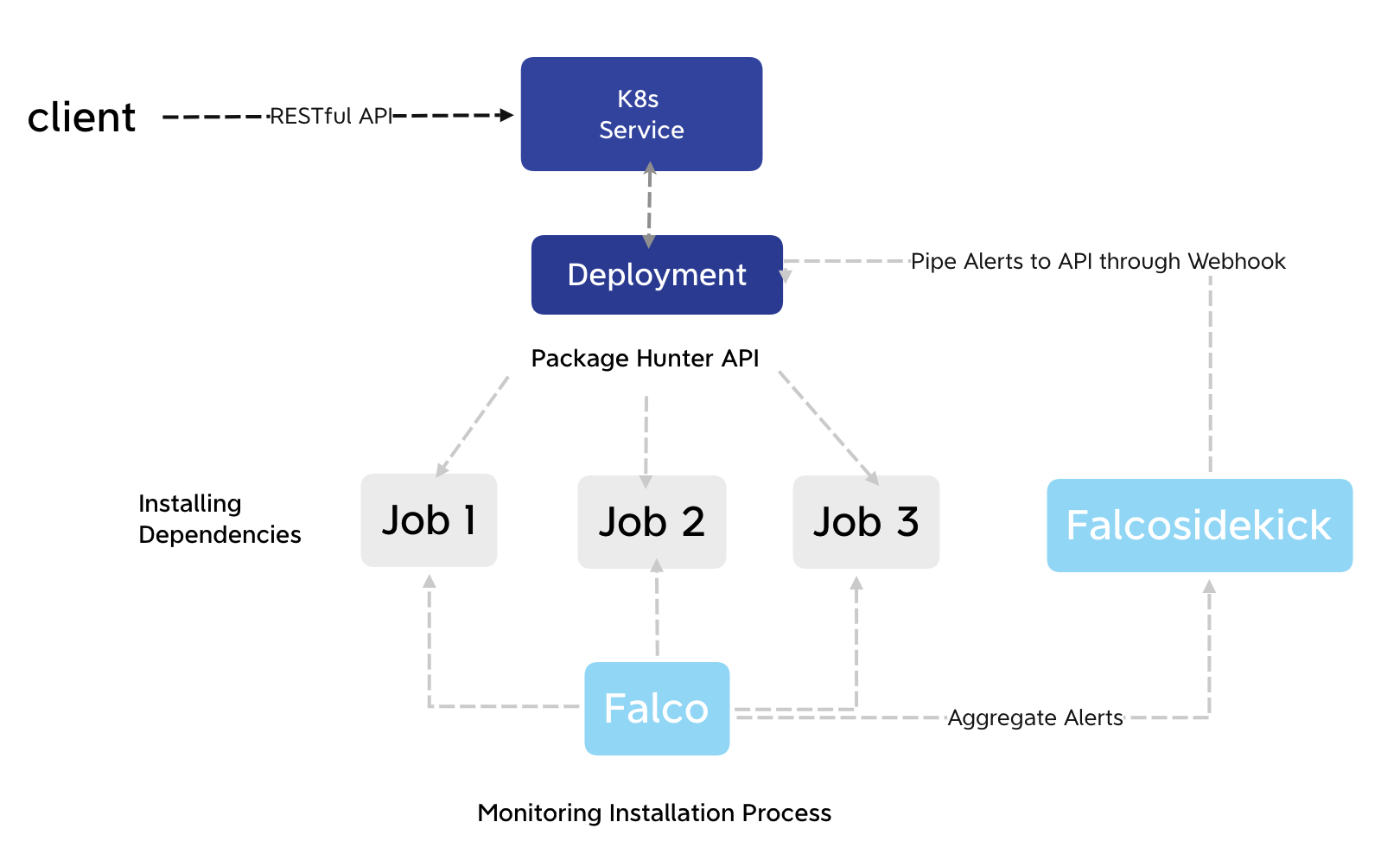

Before my project, Package Hunter ran in a virtual machine and was invoked by a POST request carrying the source code in the request body. It would then set up a Docker container on the host, copy the source code into the container, and run the installation command for the dependencies. During installation, Falco checked the behavior of the dependencies against a custom rule set and sent alerts via its gRPC API.
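
Because Falco watches system calls across the whole host, its alerts have to be matched back to the container created for a particular scan. Below is a minimal Python sketch of that matching, not Package Hunter's actual Node.js code. It assumes each alert arrives as a JSON string whose `output_fields` contains `container.id` (which requires the rule's output format to include `%container.id`); the function name and the sample alerts are hypothetical.

```python
import json
from typing import Iterable


def alerts_for_container(raw_alerts: Iterable[str], container_id: str) -> list[dict]:
    """Keep only the Falco alerts raised inside the given container.

    Hypothetical helper: assumes one JSON-encoded alert per string, with the
    container ID exposed under output_fields["container.id"].
    """
    matched = []
    for line in raw_alerts:
        alert = json.loads(line)
        fields = alert.get("output_fields", {})
        if fields.get("container.id") == container_id:
            # Keep only the fields a scan report would most likely need.
            matched.append(
                {
                    "rule": alert.get("rule"),
                    "priority": alert.get("priority"),
                    "output": alert.get("output"),
                }
            )
    return matched


if __name__ == "__main__":
    # Illustrative alerts; real Falco output strings are longer.
    sample = [
        json.dumps({"rule": "Write below etc", "priority": "Error",
                    "output": "File below /etc opened for writing",
                    "output_fields": {"container.id": "3ad7b26ded6d"}}),
        json.dumps({"rule": "Terminal shell in container", "priority": "Notice",
                    "output": "A shell was spawned in a container",
                    "output_fields": {"container.id": "f0e1d2c3b4a5"}}),
    ]
    print(alerts_for_container(sample, "3ad7b26ded6d"))
```

Here the filtering happens after the alerts are received; the same check could also be applied while alerts are streamed from Falco's gRPC API.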

My GSoC project aimed to bring Package Hunter to Kubernetes as a Service/Deployment. Deploying it on a Kubernetes cluster brings several clear improvements:

- Provide a native environment for Falco monitoring and alerting
- Run Package Hunter in a stable and scalable manner
- Decouple the system into individual components
- Reduce disk usage and scan time by using Kubernetes features

Introducing Kubernetes therefore gives Package Hunter more robustness, flexibility, and portability.

ORIGIN: Gitlab-GSoC-2022-Project

STACK: Kubernetes, Node.js, Helm, GKE, Falco, gRPC

DELIVERY:

During the project, we found several requirements that needed to be addressed:

First, we wanted to keep the user interface consistent to minimize the learning curve for users.

Second, backward compatibility was important. Although we were migrating Package Hunter to Kubernetes, the Docker version may still be useful for local development and for the current GitLab CI. We took the time to support both approaches with as little redundancy as possible.
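
One common way to support two runtimes without duplicating logic is a small backend interface that the API layer depends on, with one implementation per runtime. The Python sketch below illustrates that idea only; `ScanBackend`, `DockerBackend`, `KubernetesBackend`, `make_backend`, `handle_request`, and the returned handle strings are hypothetical names, and the method bodies are stubs where real Docker or Kubernetes API calls would go.

```python
from abc import ABC, abstractmethod


class ScanBackend(ABC):
    """Hypothetical interface for the places a dependency scan can run."""

    @abstractmethod
    def start_scan(self, scan_id: str, install_command: list[str]) -> str:
        """Launch the installation and return a handle for the running workload."""


class DockerBackend(ScanBackend):
    def start_scan(self, scan_id: str, install_command: list[str]) -> str:
        # Stub: a real implementation would create a container via the Docker API.
        return f"docker-container/{scan_id}"


class KubernetesBackend(ScanBackend):
    def start_scan(self, scan_id: str, install_command: list[str]) -> str:
        # Stub: a real implementation would submit a Kubernetes Job.
        return f"k8s-job/{scan_id}"


BACKENDS = {"docker": DockerBackend, "kubernetes": KubernetesBackend}


def make_backend(name: str) -> ScanBackend:
    """Pick a backend from configuration; callers never see which one it is."""
    try:
        return BACKENDS[name]()
    except KeyError:
        raise ValueError(f"unknown backend: {name!r}") from None


def handle_request(backend: ScanBackend, scan_id: str) -> dict:
    # Shared request handling, identical for both runtimes.
    handle = backend.start_scan(scan_id, ["npm", "install"])
    return {"id": scan_id, "workload": handle}
```

Because `handle_request` depends only on `ScanBackend`, switching runtimes becomes a configuration choice such as `make_backend("kubernetes")` rather than a second code path.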

Third, for future extensibility and maintenance, we made it easier to add support for more programming languages and package managers.
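
With that kind of flexibility, adding a package manager can be reduced to registering its manifest file and install command. The registry below is a hypothetical illustration: its entries and detection order do not describe which package managers Package Hunter currently supports, although the commands themselves (`npm install`, `yarn install`, `bundle install`, `pip install -r requirements.txt`) are the standard ones for each tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PackageManager:
    name: str
    manifest: str                      # file whose presence identifies the project type
    install_command: tuple[str, ...]   # command run inside the scan container


# Hypothetical registry: supporting a new ecosystem means adding one entry.
REGISTRY = (
    PackageManager("yarn", "yarn.lock", ("yarn", "install")),
    PackageManager("npm", "package.json", ("npm", "install")),
    PackageManager("bundler", "Gemfile", ("bundle", "install")),
    PackageManager("pip", "requirements.txt", ("pip", "install", "-r", "requirements.txt")),
)


def detect(project_files: set[str]) -> PackageManager:
    """Return the first registered manager whose manifest file is present."""
    for pm in REGISTRY:
        if pm.manifest in project_files:
            return pm
    raise ValueError("no supported package manager found")
```

Yarn is checked before npm because a Yarn project also contains `package.json`; whenever manifests overlap, the order of the registry decides the result.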

To reach these goals, we carefully refactored the code, splitting the original, complex codebase into multiple loosely coupled layers. We also agreed on a consistent coding style so that all collaborators work to the same standard.

Of course, unexpected challenges came up from time to time. Thanks to my mentors' patient guidance and warm support, we overcame them and completed the project successfully. I will always be grateful for this GSoC experience, which taught me far more than programming skills alone.

First, we prepare a cluster on Google Kubernetes Engine (GKE) and deploy the Falco stack through Helm charts.

For fault tolerance and scalability, we run our API service as a Kubernetes Deployment.
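
As a rough illustration, such a Deployment can be expressed as a plain `apps/v1` manifest. The sketch builds it as a Python dict and prints JSON, which `kubectl apply -f -` accepts on standard input just like YAML. The name, image, port 3000, and replica count are assumptions for illustration, not the project's real values.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int, port: int) -> dict:
    """Build a minimal apps/v1 Deployment for the API service (hypothetical values)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # Several replicas: one crashing pod does not take the API down.
            "replicas": replicas,
            # The selector must match the pod template's labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Placeholder image; pipe the output into `kubectl apply -f -`.
    manifest = deployment_manifest(
        "package-hunter", "registry.example.com/package-hunter:latest", 3, 3000)
    print(json.dumps(manifest, indent=2))
```

If a pod crashes, the Deployment controller replaces it to keep the requested number of replicas; scaling out means raising that number, for example with `kubectl scale deployment/package-hunter --replicas=5`.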

For load balancing, we set up a Kubernetes Service as a bridge between clients and the Deployment's pods.
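
A Service selects pods by label and gives them a single stable virtual IP and DNS name, spreading incoming connections across the ready pods it selects. A minimal `ClusterIP` Service could be built as below; the `app` label value and the mapping of port 80 to container port 3000 are assumptions for illustration.

```python
import json


def service_manifest(name: str, app_label: str, port: int, target_port: int) -> dict:
    """Build a ClusterIP Service in front of pods labelled app=<app_label> (hypothetical)."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "ClusterIP",
            # Every ready pod carrying this label becomes an endpoint of the Service.
            "selector": {"app": app_label},
            # Clients connect to `port`; traffic is forwarded to `targetPort` on a pod.
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(service_manifest("package-hunter", "package-hunter", 80, 3000), indent=2))
```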

For simplicity, we structure the data as plain JSON.
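
To make "plain JSON" concrete, here is a sketch of a scan result kept as a simple record that round-trips through `json.dumps` and `json.loads`. The `ScanResult` class and its fields (`id`, `status`, `result`) are a hypothetical shape for illustration, not a documented Package Hunter schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ScanResult:
    """Hypothetical shape of a scan result exchanged as JSON."""
    id: str
    status: str                                        # e.g. "pending" or "finished"
    result: list[dict] = field(default_factory=list)   # alerts, once available


def to_json(scan: ScanResult) -> str:
    # Dataclass -> dict -> JSON text; no custom encoder is needed.
    return json.dumps(asdict(scan))


def from_json(payload: str) -> ScanResult:
    # JSON text -> dict -> dataclass; unknown keys would raise a TypeError.
    return ScanResult(**json.loads(payload))
```

Keeping every field a plain string, list, or dict means any component, in any language, can read the data without a shared library.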

Even though GSoC is over, there is still plenty of room to improve Package Hunter.

First, we could introduce a Kubernetes Ingress. This would let us bind a GitLab-internal DNS name to the Service, which would simplify access control and make routing more flexible.
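
A minimal `networking.k8s.io/v1` Ingress that routes an internal host name to the Service might look like the sketch below. The host `package-hunter.internal.example.com`, the resource names, and the port are placeholders; an Ingress only takes effect if an Ingress controller is running in the cluster.

```python
import json


def ingress_manifest(name: str, host: str, service: str, service_port: int) -> dict:
    """Build a minimal networking.k8s.io/v1 Ingress routing `host` to a Service."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            "rules": [
                {
                    "host": host,
                    "http": {
                        "paths": [
                            {
                                # Send every path under "/" to the Service.
                                "path": "/",
                                "pathType": "Prefix",
                                "backend": {
                                    "service": {"name": service, "port": {"number": service_port}}
                                },
                            }
                        ]
                    },
                }
            ]
        },
    }


if __name__ == "__main__":
    # Placeholder host and names, not a real GitLab DNS entry.
    print(json.dumps(ingress_manifest(
        "package-hunter", "package-hunter.internal.example.com", "package-hunter", 80), indent=2))
```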

To deliver the source code to be scanned, we could introduce a Kubernetes Persistent Volume. The volume would be mounted into both the Deployment and the Job, so both could read and write it like a local disk. This would reduce unnecessary I/O and speed up the Job.
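
A sketch of the shared-volume idea, under stated assumptions: a PersistentVolumeClaim requests the storage, the Deployment writes each upload to `/scans/<scan id>` on that claim (not shown), and a Job per scan mounts the same claim and runs the install in that directory. All names, paths, and sizes are hypothetical. `ReadWriteMany` is needed if the Deployment pod and the Job pod can be scheduled on different nodes, and not every storage class supports that access mode.

```python
def pvc_manifest(name: str, size: str) -> dict:
    """Build a PersistentVolumeClaim for uploaded sources (hypothetical sizing)."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # Lets pods on different nodes mount the volume at the same time;
            # the cluster's storage class must support this mode.
            "accessModes": ["ReadWriteMany"],
            "resources": {"requests": {"storage": size}},
        },
    }


def scan_job_manifest(scan_id: str, image: str, claim: str, install_command: list[str]) -> dict:
    """Build a Job that installs dependencies from /scans/<scan_id> on the shared claim.

    scan_id must be usable in a Kubernetes name (lowercase letters, digits, '-').
    """
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": f"scan-{scan_id}"},
        "spec": {
            "backoffLimit": 0,  # assumed choice: report a failed install instead of retrying
            "template": {
                "spec": {
                    "restartPolicy": "Never",  # Jobs require Never or OnFailure
                    "containers": [{
                        "name": "install",
                        "image": image,
                        "command": install_command,
                        "workingDir": f"/scans/{scan_id}",
                        "volumeMounts": [{"name": "sources", "mountPath": "/scans"}],
                    }],
                    "volumes": [{"name": "sources", "persistentVolumeClaim": {"claimName": claim}}],
                }
            },
        },
    }
```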

In addition, a standalone store for caching alerts would make Package Hunter more scalable. If we run more replicas in the future, they could all fetch alerts directly from the cache. Redis would be a good candidate.
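
The cache could be sketched as a small interface keyed by scan ID. The Python below uses an in-memory dict with a time-to-live so it runs standalone; it is not shared between replicas, which is exactly what a Redis-backed version would add by mapping `put` to `SET key value EX ttl` and `get` to `GET key`. The `AlertCache` class, the `alerts:<scan id>` key scheme, and the TTL are assumptions.

```python
import json
import time
from typing import Callable, Optional


class AlertCache:
    """In-memory stand-in for a shared alert cache such as Redis (hypothetical).

    Values are stored as JSON strings under "alerts:<scan id>" keys, the same
    form they could take in Redis.
    """

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested
        self._data: dict[str, tuple[float, str]] = {}

    def put(self, scan_id: str, alerts: list[dict]) -> None:
        expires_at = self._clock() + self._ttl
        self._data[f"alerts:{scan_id}"] = (expires_at, json.dumps(alerts))

    def get(self, scan_id: str) -> Optional[list[dict]]:
        key = f"alerts:{scan_id}"
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, payload = entry
        if self._clock() >= expires_at:
            # Expired entries are dropped lazily, on read.
            del self._data[key]
            return None
        return json.loads(payload)
```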

We welcome anyone with a passion for open source to contribute to this amazing community! See our Contributing Guide to learn more.